Testing a single voxel#

A short ago — Modeling a single voxel, we were modeling a single voxel time course.

Let’s get that same voxel time course back again:

import numpy as np

import matplotlib.pyplot as plt

# Print array values to 4 decimal places

np.set_printoptions(precision=4)

import nibabel as nib

import nipraxis

# Fetch the data file

bold_fname = nipraxis.fetch_file('ds114_sub009_t2r1.nii')

img = nib.load(bold_fname)

data = img.get_fdata()

data = data[..., 4:]

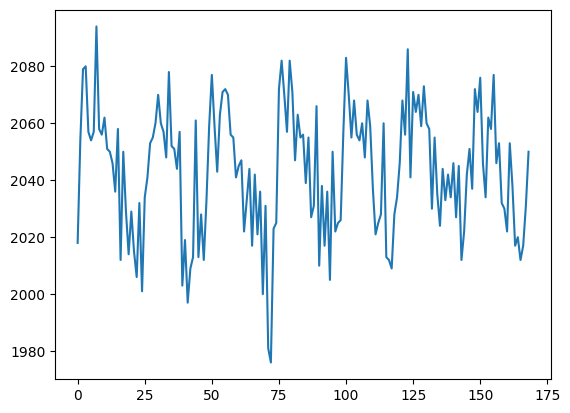

The voxel coordinate (3D coordinate) that we were looking at in Voxel time courses was at (42, 32, 19):

voxel_time_course = data[42, 32, 19]

plt.plot(voxel_time_course)

[<matplotlib.lines.Line2D at 0x7fca9c9485b0>]

We then compiled a design for this time-course and estimated it.

We used the convolved regressor from Convolving with the hemodyamic response

function in a simple regression.

conv_reg_fname = nipraxis.fetch_file('ds114_sub009_t2r1_conv.txt')

conv_reg_fname

'/home/runner/.cache/nipraxis/0.5/ds114_sub009_t2r1_conv.txt'

convolved = np.loadtxt(conv_reg_fname)

# Knock off first 4 elements to match data

convolved = convolved[4:]

N = len(convolved)

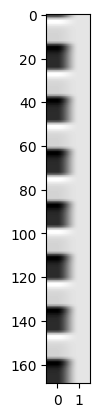

X = np.ones((N, 2))

X[:, 0] = convolved

plt.imshow(X, cmap='gray', aspect=0.1)

<matplotlib.image.AxesImage at 0x7fca9c9bfeb0>

\(\newcommand{\yvec}{\vec{y}}\) \(\newcommand{\xvec}{\vec{x}}\) \(\newcommand{\evec}{\vec{\varepsilon}}\) \(\newcommand{Xmat}{\boldsymbol X} \newcommand{\bvec}{\vec{\beta}}\) \(\newcommand{\bhat}{\hat{\bvec}} \newcommand{\yhat}{\hat{\yvec}}\)

As you will remember from introduction to the general linear model, our model is:

We can get our least squares parameter estimates for \(\bvec\) with:

where \(\Xmat^+\) is the pseudoinverse of \(\Xmat\). When \((\Xmat^T \Xmat)\) is invertible, the pseudoinverse is given by:

We find the \(\bhat\) for our data and design:

import numpy.linalg as npl

Xp = npl.pinv(X)

beta_hat = Xp @ voxel_time_course

beta_hat

array([ 31.1855, 2029.3677])

Our plan now is to do an hypothesis test on our \(\bhat\) values.

The \(\bhat\) values are sample estimates of the unobservable true \(\bvec\) parameters.

Because the \(\bhat\) values are sample estimates, the values we have depend on the particular sample we have, and the particular instantiation of the random noise (residuals). If we were to take another set of data from the same voxel during the same task, we would get another estimate, because there would be different instantiation of the random noise. It’s possible to show that the variance / covariance of the \(\hat\beta\) estimates is:

where \(\sigma^2\) is the true unknown variance of the errors. See wikipedia proof, and stackoverflow proof.

We can use an estimate \(\hat\sigma^2\) of \(\sigma^2\) to give us estimated standard errors of the variance covariance (see: Unbiased estimate of population variance):

y = voxel_time_course

y_hat = X @ beta_hat

residuals = y - y_hat

# Residual sum of squares

RSS = np.sum(residuals ** 2)

# Degrees of freedom: n - no independent columns in X

df = X.shape[0] - npl.matrix_rank(X)

# Mean residual sum of squares

MRSS = RSS / df

# This is our s^2

s2_hat = MRSS

print(s2_hat)

print(np.sqrt(s2_hat))

247.93757096349455

15.746033499376741

We now have an standard estimate of the variance / covariance of the \(\bhat\):

v_cov = s2_hat * npl.inv(X.T @ X)

In particular, I can now divide my estimate for the first parameter, by the standard error of that estimate:

numerator = beta_hat[0]

denominator = np.sqrt(v_cov[0, 0])

t_stat = numerator / denominator

print(t_stat)

12.826780099049788

I can look up the probability of this t statistic using scipy.stats:

from scipy.stats import t as t_dist

# Get p value for t value using cumulative density dunction

# (CDF) of t distribution

ltp = t_dist.cdf(t_stat, df) # lower tail p

p = 1 - ltp # upper tail p

p

0.0

Compare our manual estimation to R#

Finally let’s save the voxel time course for us to compare this analysis to

the lm estimation in R:

np.savetxt('voxel_time_course.txt', voxel_time_course)

Here are the commands to run the same analysis in R:

# Simple regression model in R

# Load the voxel time course

voxels = read.table('voxel_time_course.txt')$V1

# Load the convolved regressor

convolved = read.table('ds114_sub009_t2r1_conv.txt')$V1

# Drop the first four values to correspond to the data

convolved = convolved[-(1:4)]

# Fit linear model

res = lm(voxels ~ convolved)

print(summary(res))

The result from the R script is:

Call:

lm(formula = voxels ~ convolved)

Residuals:

Min 1Q Median 3Q Max

-53.368 -9.747 0.253 9.353 40.579

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 2029.368 1.635 1241.40 <2e-16 ***

convolved 31.186 2.431 12.83 <2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 15.75 on 167 degrees of freedom

Multiple R-squared: 0.4963, Adjusted R-squared: 0.4933

F-statistic: 164.5 on 1 and 167 DF, p-value: < 2.2e-16